About me

I build DevOps pipelines that streamline the software development life cycle and deliver scalable, reliable solutions.

I have a deep curiosity for how systems work and how they can be optimized. With years of experience across DevOps, automation, and cloud architecture, I've grown into a versatile problem-solver who thrives at the intersection of engineering and strategy.

What drives me is the pursuit of elegant, scalable solutions that make life easier for developers, teams, and users. Whether it's designing resilient infrastructures, improving CI/CD pipelines, or leading initiatives around best practices, I bring a mix of technical depth and a strong sense of ownership.

Outside the command line, I’m a lifelong learner who enjoys exploring new technologies, mentoring others, and constantly rethinking how we build and ship software.

I began learning to code and building systems well before the rise of generative AI, becoming proficient in seven programming languages before it emerged. At the same time, I’ve been an early adopter of AI tools, integrating them into my workflow to boost both productivity and creativity. I also leverage these tools to accelerate learning, explore emerging technologies, and spark new ideas.

Beyond just using AI tools, I’ve taken a deep dive into how they work under the hood, gaining hands-on experience integrating and self-hosting them. I’ve also explored foundational concepts in emerging fields like quantum computing, always staying curious and proactive. I'm ready for the age of AI and prepared to embrace any technological revolution that lies ahead!

Professional Experience

Software Engineer / DevOps Lead

WasteFlow

Jul - Sept 2024 / Oct 2024 - Present

Lausanne, CH

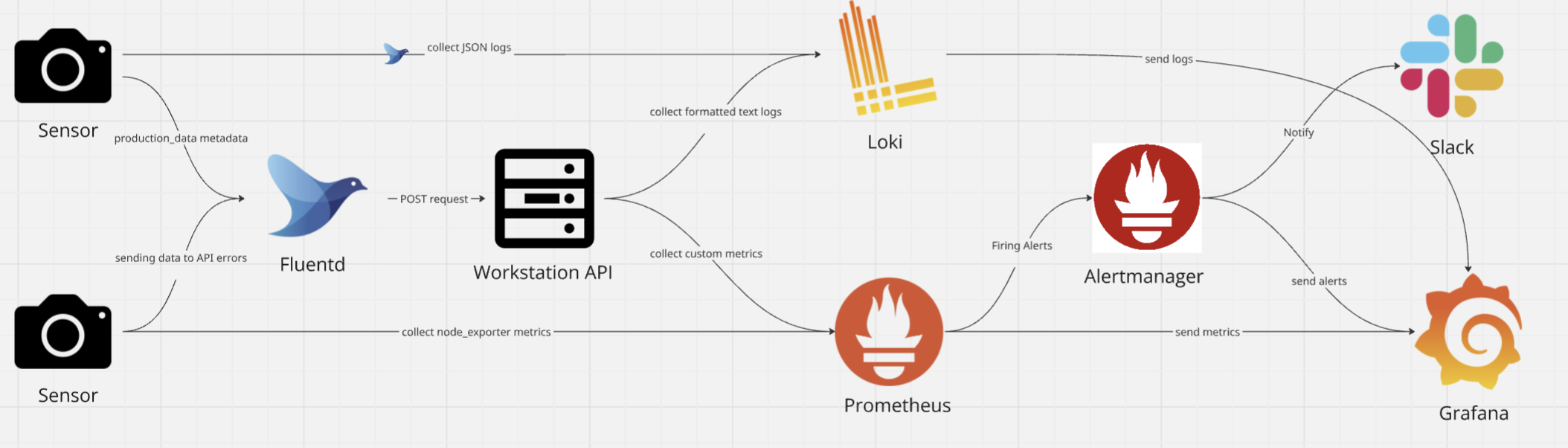

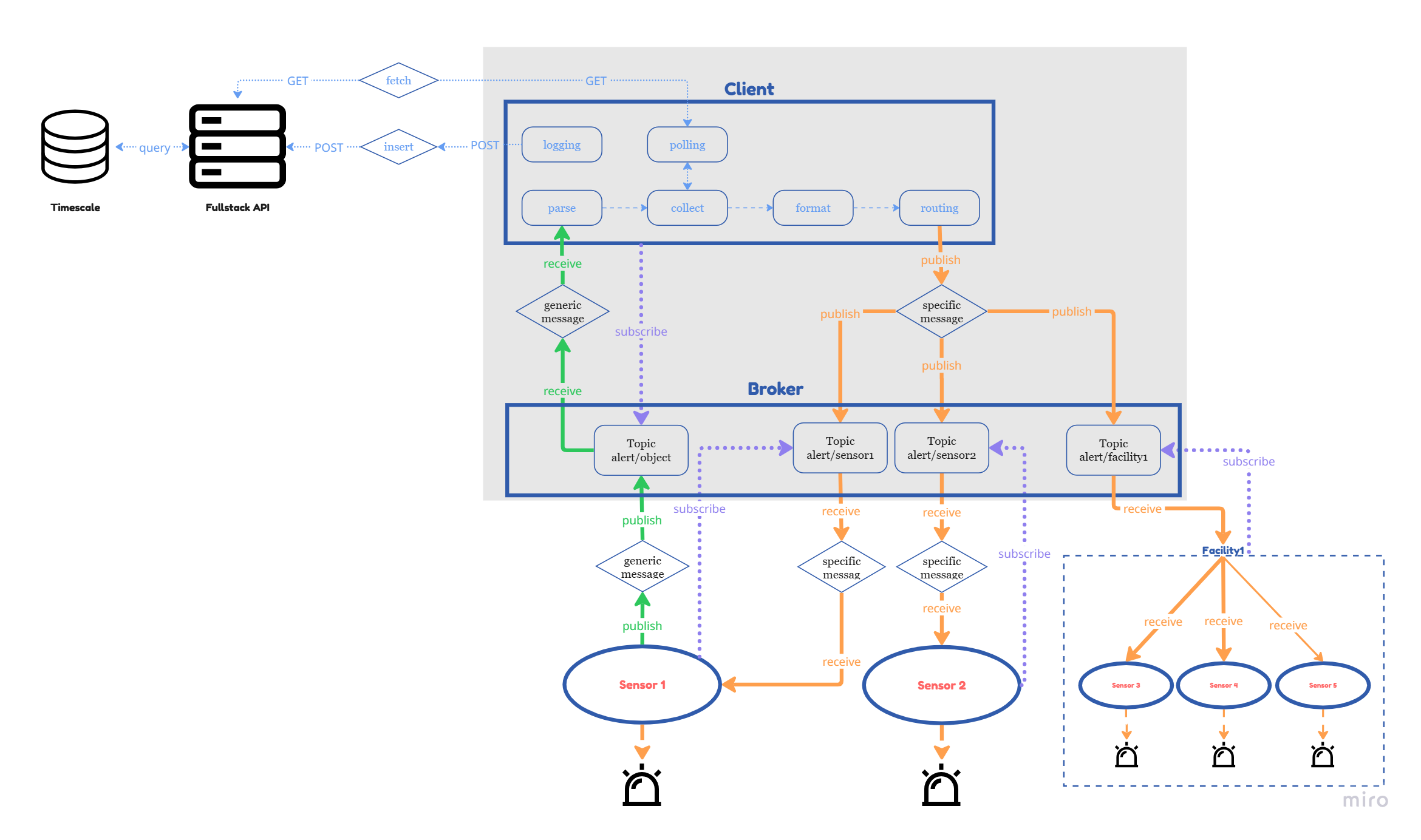

I currently lead all DevOps efforts, overseeing CI/CD pipelines, automated deployments across cloud and Tailscale-connected hosts, as well as monitoring, logging, and alerting systems. I ensure security and compliance across our infrastructure, implement automation to streamline operations, and drive performance optimization across the stack.

I’m responsible for managing the full SDLC across multiple teams, configuring and maintaining infrastructure as code, and supporting the deployment and maintenance of computer vision systems at industrial waste sorting facilities. This includes complex setup and configuration of edge devices—covering hardware drivers, networking, permissions, and credential management—ensuring secure and reliable connectivity to our cloud infrastructure.

Responsibilities

- Build CI/CD pipelines to streamline operations

- Ensure system reliability through observability

- Oversee the whole software development lifecycle

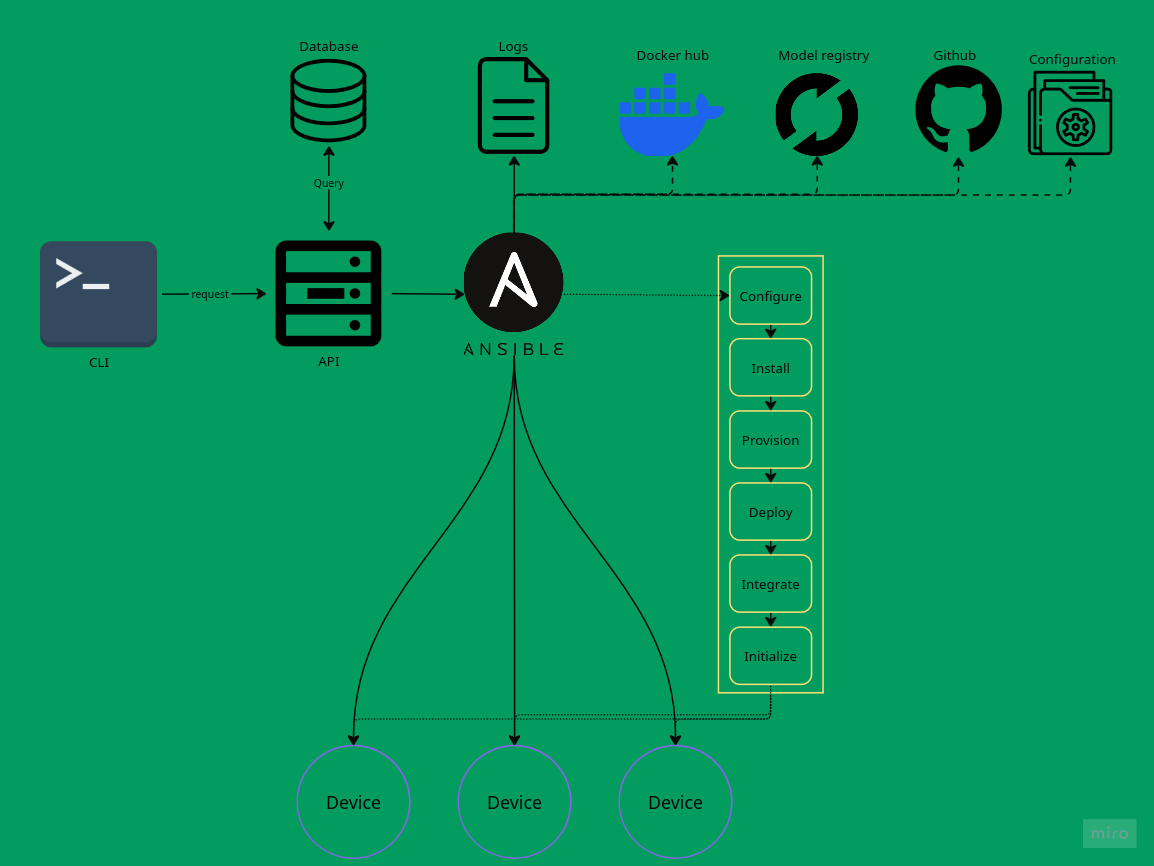

- Automate provisioning and configuration of devices

- Manage secure network connectivity

- Configure and maintain infrastructure as code for cloud and edge devices

Tech Stack

Project Gallery

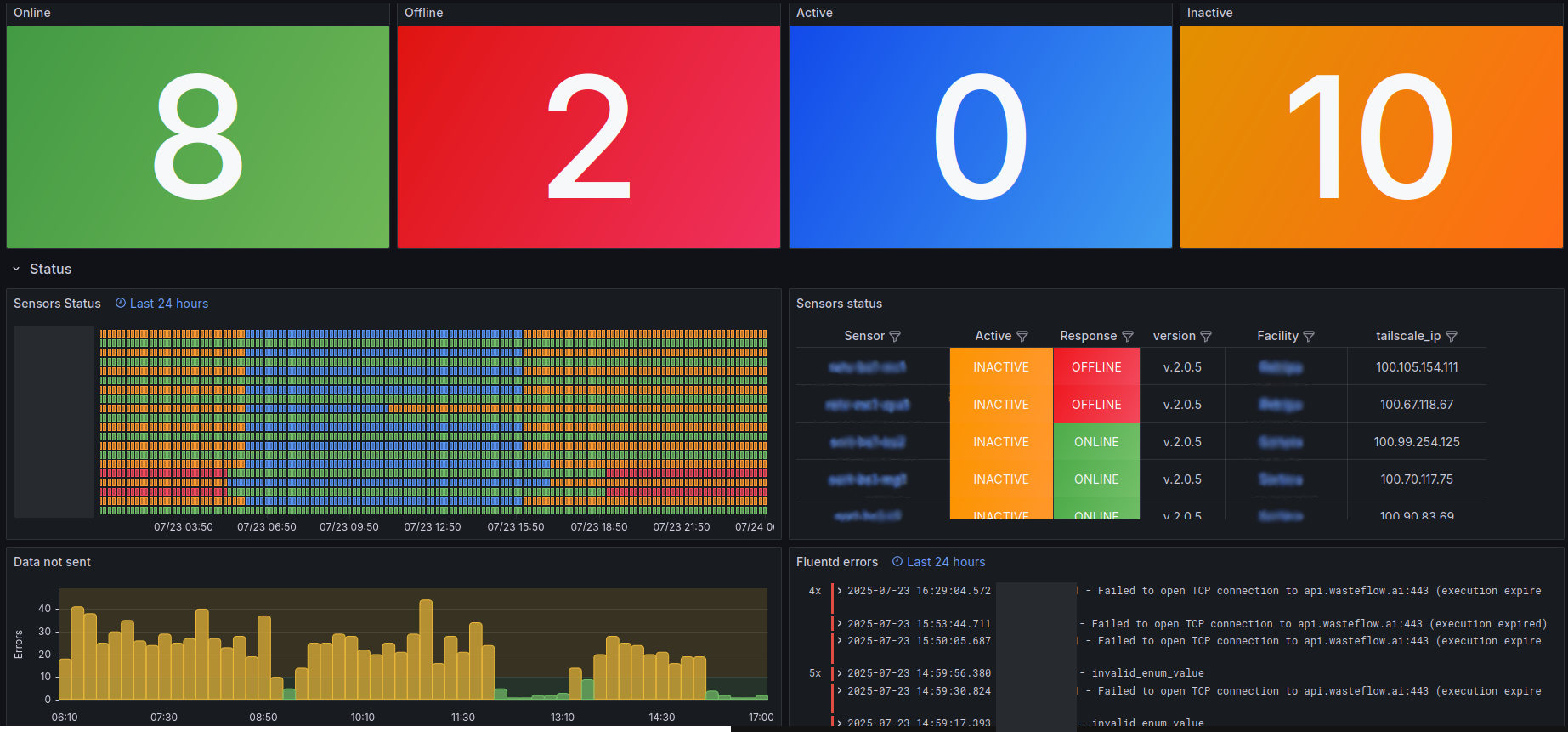

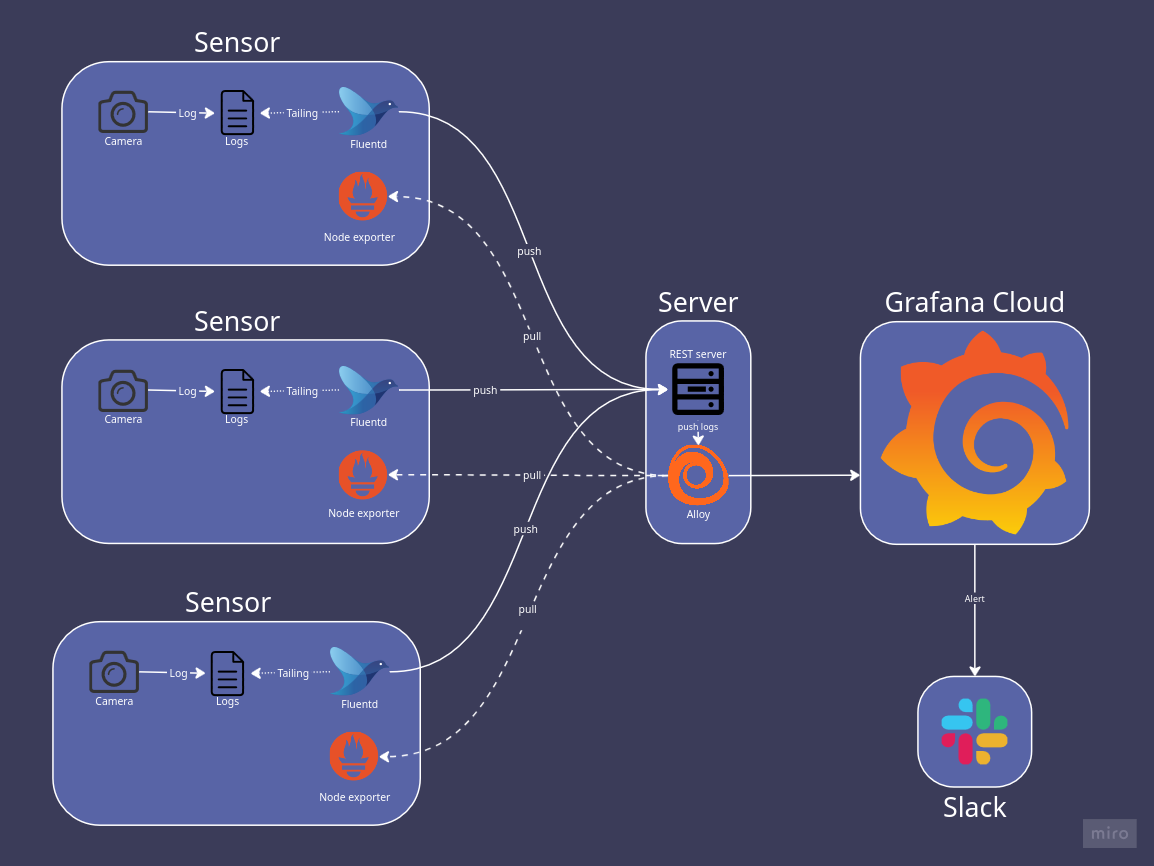

Real-time monitoring of waste sensor statuses

Infrastructure performance and historical data analysis

Complete Grafana stack architecture design

Multi-source alerts with physical, email, SMS and dashboard targets

Intern in AI specializing in LLM

Innovatim Sarl

April 2024 - June 2024

Neuchâtel, CH

I led the DevOps and MLOps infrastructure for a generative AI–powered recruitment platform. I self-hosted and deployed the platform and supporting LLMs on Infomaniak’s GPU-enabled cloud infrastructure. My responsibilities included managing containerization, orchestration, model deployment, GPU resource allocation, and ensuring reliable uptime and scalability for both the backend services and AI workloads.

Responsibilities

- Deployed the platform to the cloud for reliability and scalability

- Ran the recruitment platform with RAG and LLMs on GPU

- Embedded data and stored it in a vector database

- Managed containerization and orchestration for AI workloads

Tech Stack

Project Gallery

Dashboard powered by LLMs for recruitment

Generate job descriptions, analyze resumes, and more

Recruiter dashboard and workflow interface

Administrative controls and system management

Software Development Intern

Federal Office of Meteorology and Climatology MeteoSwiss

May 2023 - November 2023

Geneva, CH

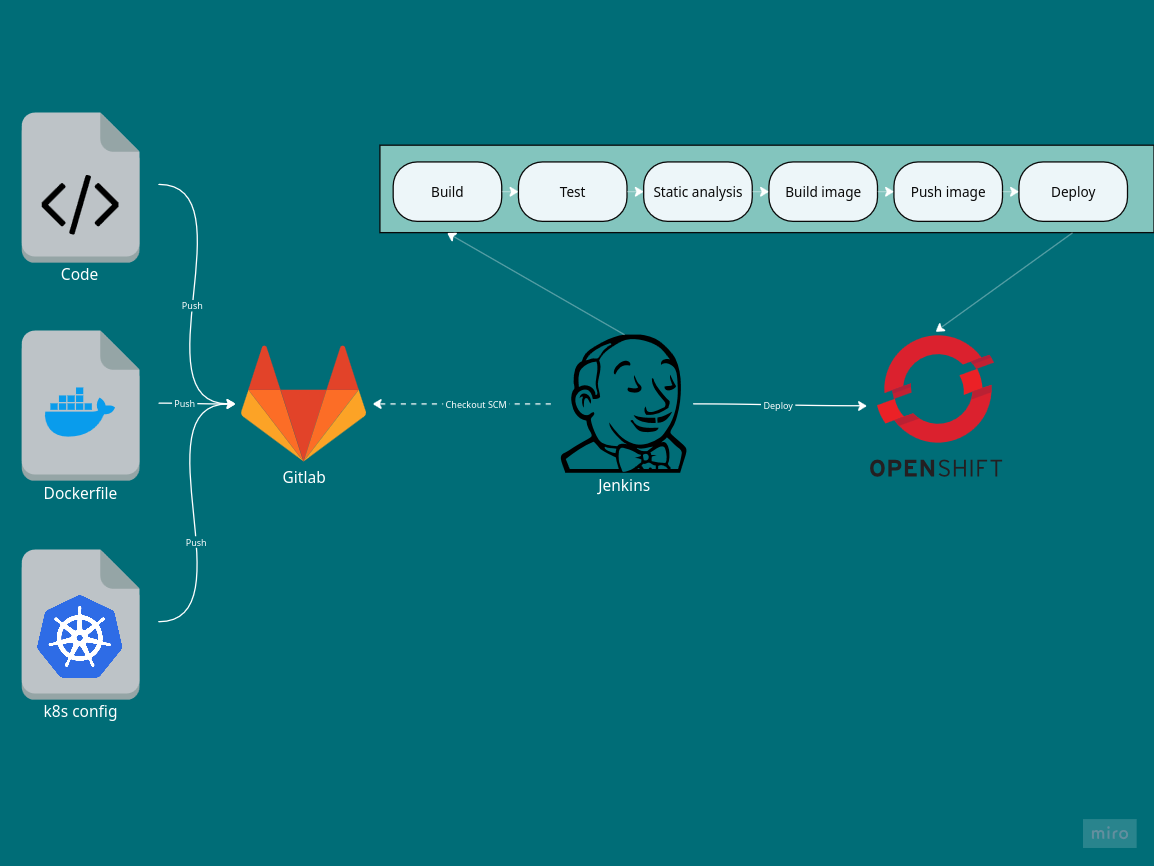

Contributed to weather forecasting systems by modernizing Python tools and building containerized APIs to improve deployment workflows. Created the first software in the healthtools domain, which involved setting up and providing configurations for the CI/CD processes. Gained hands-on experience using Docker, Kubernetes, and OpenShift for containerization and orchestration. Followed best practices with GitLab CI/CD and Jenkins, and used monitoring tools such as Prometheus, Grafana, and Kibana to ensure system reliability and observability throughout the development lifecycle.

Responsibilities

- Automated weather forecast report generation and delivery

- Containerized Python tools as scalable APIs

- Supported CI/CD pipelines enabling zero-downtime deployments

- Handled log aggregation and monitoring to ensure system reliability

Tech Stack

Project Gallery

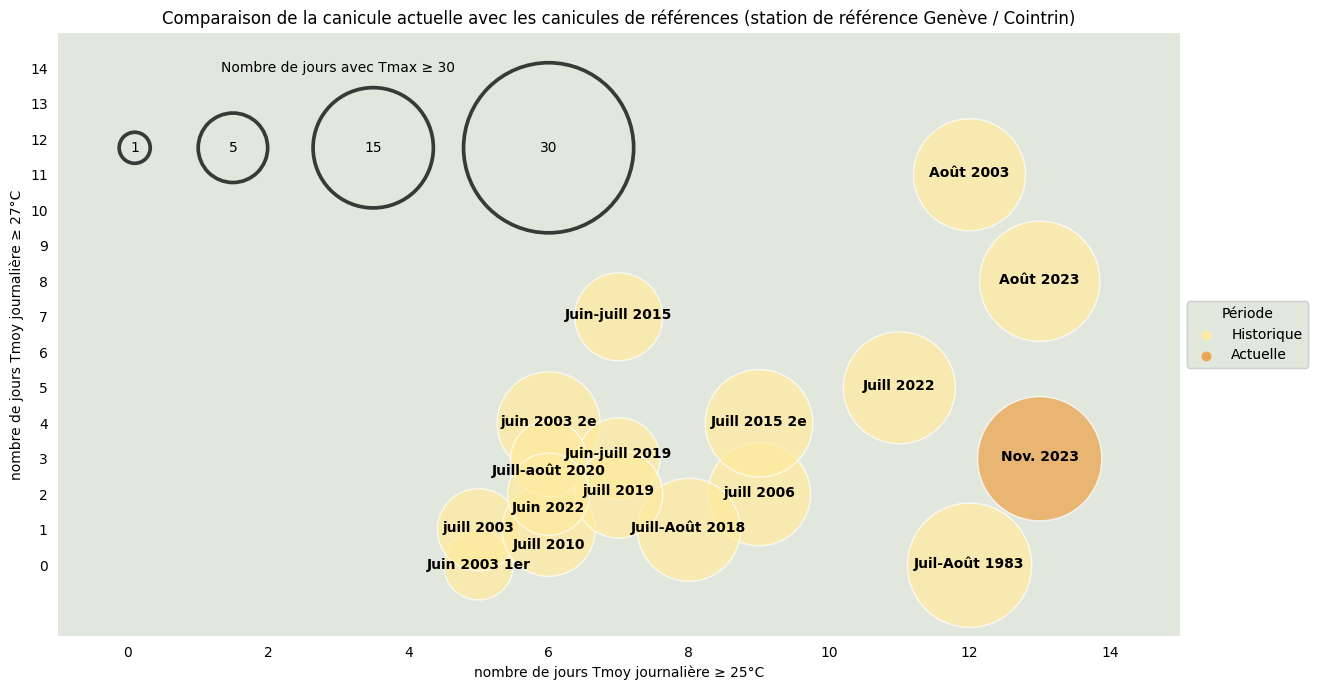

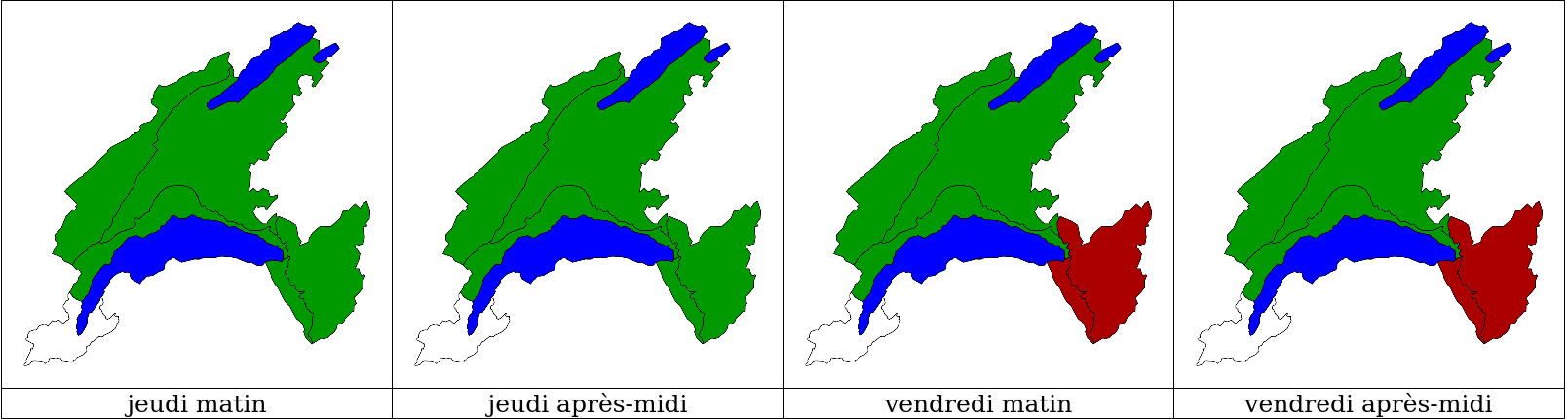

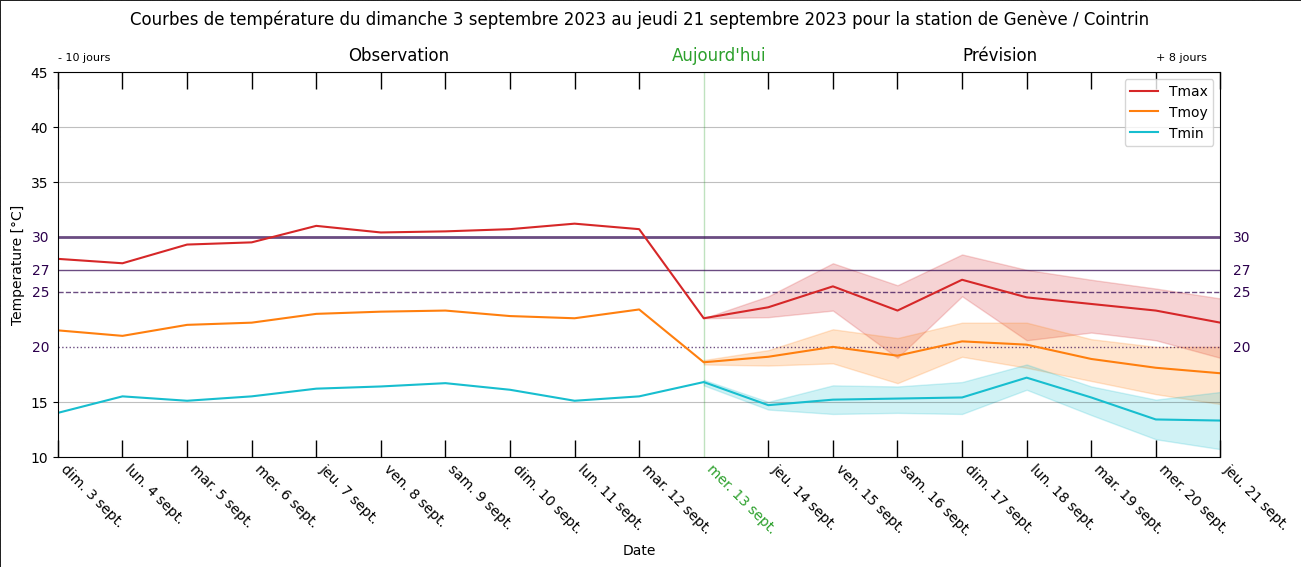

Interactive data visualization

Geographical weather mapping

Temperature forecasting system

Featured Projects

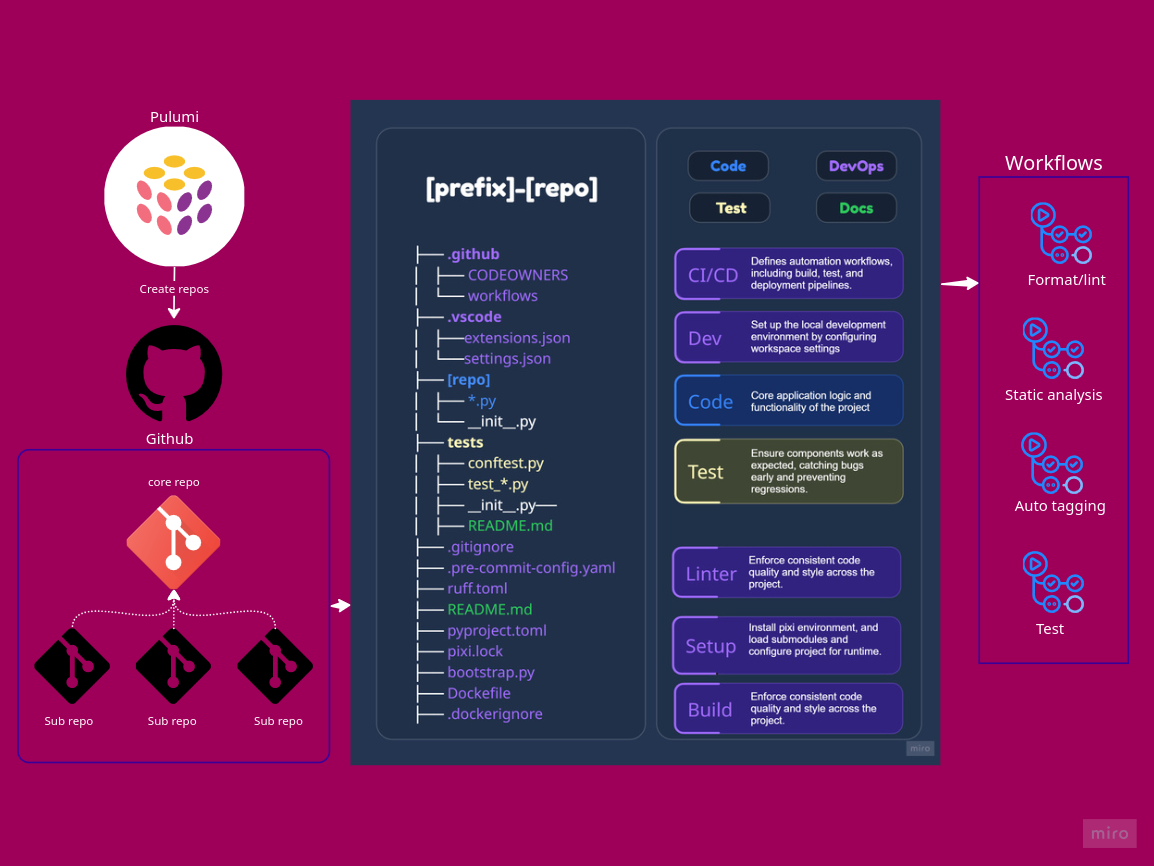

Automated Repos Deployment

Infrastructure-as-code setup for GitHub ML repositories using Pulumi. Core and sub repos are provisioned automatically. The environment is set up via a script that installs both binaries and Python dependencies.

Self-Hosted CI/CD Pipeline

Comprehensive, fully open-source CI/CD platform built from scratch for a microservices architecture. Features automated testing pipelines, security scanning, containerized deployments, and full GitOps workflow integration.

Grafana Stack Monitoring

Distributed monitoring platform for a network of remote sensors, combining metrics and logs collection, enrichment, and visualization. Designed for unified observability with real-time dashboards and proactive alerting.

Automated Sensor Provisioning

End-to-end automation for provisioning and deploying remote sensors. A CLI command triggers an API server, which gathers device and configuration data from the database. Necessary files are pulled from multiple sources.
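The flow described above can be illustrated with a minimal Python sketch. It is not the actual implementation: the in-memory `DEVICE_DB`, the `FILE_SOURCES` mapping, the `ProvisioningPlan` type, and the `build_plan` function are all hypothetical stand-ins for the real database, file sources, and API server logic, and the device names and file names are made up for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical in-memory stand-in for the device/configuration database.
DEVICE_DB = {
    "sensor-001": {"site": "plant-a", "model": "cam-v2", "config": {"fps": 15}},
}

# Hypothetical file sources; each maps a device model to the files it provides.
FILE_SOURCES = {
    "drivers": {"cam-v2": ["cam-v2.ko"]},
    "credentials": {"cam-v2": ["device.pem"]},
}


@dataclass
class ProvisioningPlan:
    device_id: str
    site: str
    config: dict
    files: dict = field(default_factory=dict)


def build_plan(device_id: str) -> ProvisioningPlan:
    """Sketch of what an API server might do when the CLI requests provisioning."""
    try:
        record = DEVICE_DB[device_id]
    except KeyError:
        raise LookupError(f"unknown device: {device_id}") from None

    # Gather device and configuration data from the (stand-in) database.
    plan = ProvisioningPlan(device_id, record["site"], dict(record["config"]))

    # Collect the necessary files from every source for this device model.
    for source, by_model in FILE_SOURCES.items():
        plan.files[source] = list(by_model.get(record["model"], []))
    return plan


if __name__ == "__main__":
    print(build_plan("sensor-001"))
```

Keeping the plan as plain data separates "what should be deployed" from "how it gets deployed", so the same plan could be inspected, logged, or dry-run before anything touches a device.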